Easily Manage Large Datasets in Laravel with LazyCollection

When dealing with large datasets in web applications, managing memory and processing efficiently becomes a critical challenge. Laravel's **LazyCollection** provides an elegant solution to this problem by enabling developers to work with massive datasets without overwhelming system resources. In this comprehensive guide, we will explore how to leverage LazyCollection to handle large datasets in Laravel with practical examples.

1. Introduction to LazyCollection

LazyCollection was introduced in Laravel 6.0 and lets you process large datasets in a memory-efficient way. A regular collection holds all of its items in memory at once. A LazyCollection instead wraps a PHP generator and pulls items from it one at a time, only when the next step in the chain asks for them.
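LazyCollection builds on plain PHP generators. The sketch below uses no Laravel code at all. It only shows that a generator's body runs on demand, so stopping after three items means the remaining 999,997 values are never produced. The `$produced` counter is illustrative and not part of any Laravel API.

```php
<?php

// Counts how many values the generator body has actually produced.
$produced = 0;

// An immediately invoked closure returns a Generator; no values exist yet.
$numbers = (function () use (&$produced) {
    for ($i = 1; $i <= 1000000; $i++) {
        $produced++;
        yield $i;
    }
})();

$taken = [];
foreach ($numbers as $n) {
    $taken[] = $n;
    if (count($taken) === 3) {
        break; // stop pulling: values 4 through 1,000,000 are never produced
    }
}

echo "produced {$produced} values\n";
```

A chain such as `LazyCollection::make(...)->take(3)` should behave the same way, because `take()` stops pulling from the source once it has enough items.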

Key Features

- Incremental data processing

- Minimal memory usage

- Support for working with large files or database queries

LazyCollection is particularly useful for tasks like:

- Reading and processing large CSV files

- Handling paginated API responses

- Streaming data from a database

2. How LazyCollection Differs from Regular Collections

Regular Collection

A regular collection loads all items into memory at once:

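```php
use Illuminate\Support\Collection;

// range() builds a PHP array of one million integers before the collection exists
$collection = Collection::make(range(1, 1000000));
```

This approach is memory-intensive: every item is allocated up front, and with a large enough dataset the process exceeds PHP's `memory_limit` and aborts with a fatal error.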

LazyCollection

A LazyCollection uses generators to load data one item at a time:

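```php
use Illuminate\Support\LazyCollection;

$lazyCollection = LazyCollection::make(function () {
    // Nothing here runs until the collection is iterated
    for ($i = 1; $i <= 1000000; $i++) {
        yield $i;
    }
});
```

With a LazyCollection, only the current item needs to be in memory, which makes it well suited to large datasets. This holds as long as you chain lazy methods such as `map()`, `filter()`, `take()`, and `each()`. Methods that need every item at once, such as `all()`, `sortBy()`, or `collect()`, materialize the whole set.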
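To see the difference without Laravel, the plain-PHP sketch below sums one million integers twice: once through a generator like the one above, and once through an array built by `range()`, as in the regular-collection example. `lazyRange()` is a hypothetical helper, and the byte counts reported by `memory_get_usage()` vary by PHP version, so only the size of the gap is meaningful.

```php
<?php

// Hypothetical helper: yields integers one at a time, like the LazyCollection source above.
function lazyRange(int $start, int $end): Generator
{
    for ($i = $start; $i <= $end; $i++) {
        yield $i;
    }
}

// Lazy: only the current integer is held while summing.
$before = memory_get_usage();
$lazySum = 0;
foreach (lazyRange(1, 1000000) as $n) {
    $lazySum += $n;
}
$lazyBytes = memory_get_usage() - $before;

// Eager: the full array is built first, then summed.
$before = memory_get_usage();
$numbers = range(1, 1000000);
$eagerSum = array_sum($numbers);
$eagerBytes = memory_get_usage() - $before; // $numbers is still alive here

printf("lazy: %d bytes, eager: %d bytes\n", $lazyBytes, $eagerBytes);
```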

3. Setting Up a Laravel Project

If you don't already have a Laravel project, create one:

```bash
composer create-project --prefer-dist laravel/laravel large-dataset-demo
```

Navigate to the project directory:

```bash
cd large-dataset-demo
```

4. Processing Large Datasets with LazyCollection

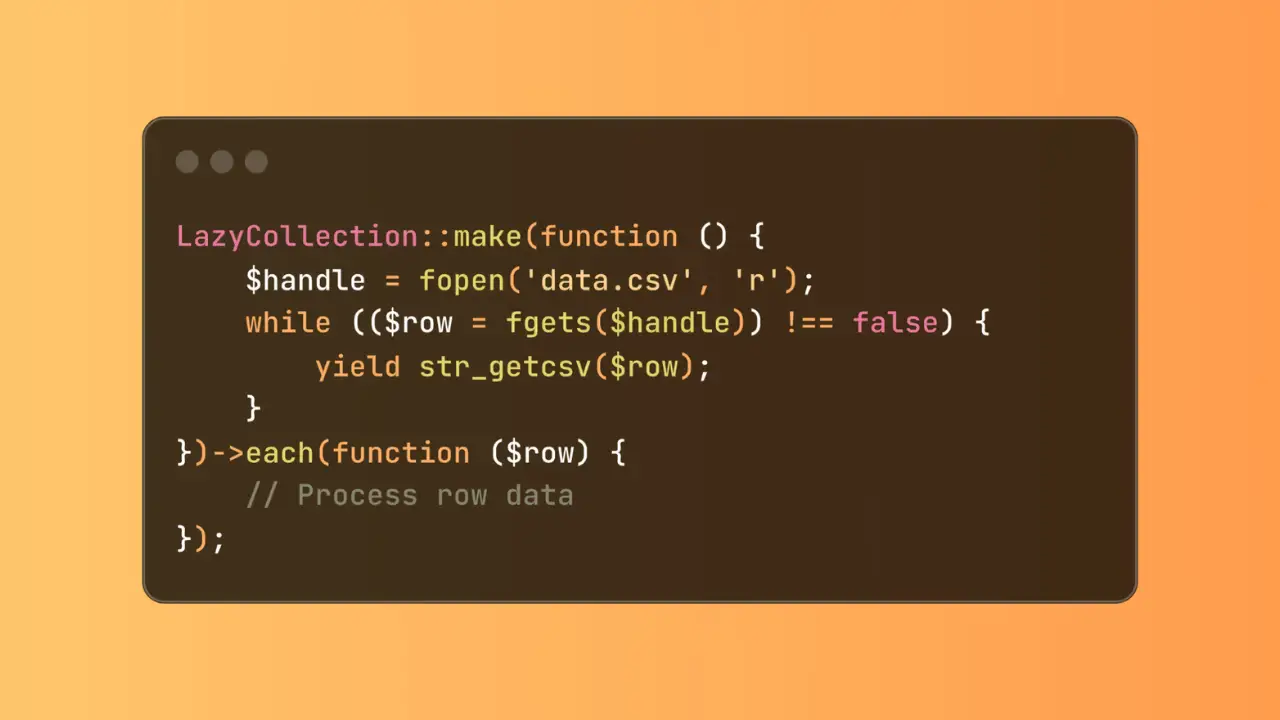

Example: Reading a Large File

LazyCollection can be used to read large files line by line without loading the entire file into memory:

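```php
use Illuminate\Support\LazyCollection;

LazyCollection::make(function () {
    $handle = fopen('large-file.csv', 'r');

    try {
        // fgetcsv() reads and parses a single line per call
        while (($line = fgetcsv($handle)) !== false) {
            yield $line;
        }
    } finally {
        // Runs even if the consumer stops early, e.g. with ->take(10)
        fclose($handle);
    }
})->each(function (array $line) {
    // Process each row; $line is an array of column values
    print_r($line);
});
```

Memory usage stays roughly constant: it is bounded by the size of the current line, not by the size of the file.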
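If the consumer stops early, code placed after the loop inside a generator never runs, so a file handle closed there would stay open. Wrapping the loop in `try`/`finally` avoids this, because PHP runs the `finally` block when a suspended generator is destroyed. The plain-PHP sketch below demonstrates this with a temporary CSV file; the file contents, `$closed`, and `$closedAfterBreak` are illustrative.

```php
<?php

// Write a small hypothetical CSV file to read back.
$path = tempnam(sys_get_temp_dir(), 'csv');
file_put_contents($path, "id,name\n1,Alice\n2,Bob\n3,Carol\n");

$closed = false; // set by the generator's finally block

$rows = (function () use ($path, &$closed) {
    $handle = fopen($path, 'r');
    try {
        while (($line = fgetcsv($handle, 0, ',', '"', '\\')) !== false) {
            yield $line;
        }
    } finally {
        // Runs when the loop ends or when a suspended generator is destroyed
        fclose($handle);
        $closed = true;
    }
})();

$firstTwo = [];
foreach ($rows as $row) {
    $firstTwo[] = $row;
    if (count($firstTwo) === 2) {
        break; // the generator is now suspended inside the try block
    }
}

$closedAfterBreak = $closed; // still false: the handle is open while $rows is alive

unset($rows); // destroying the generator runs its finally block

printf("after break: %s, after unset: %s\n", var_export($closedAfterBreak, true), var_export($closed, true));
unlink($path);
```

In a LazyCollection, the same early stop happens with methods such as `take()` or `first()`.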

5. Practical Examples with Code

5.1 Processing Large Database Queries

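Eloquent's `cursor()` method already returns a LazyCollection, so there is no need to wrap it in `LazyCollection::make()`. It runs a single query and hydrates one model at a time as you iterate:

```php
use App\Models\User;

// cursor() returns a LazyCollection that hydrates one User model at a time
User::cursor()->each(function (User $user) {
    echo $user->name . "\n";
});
```

Because `cursor()` holds only one model in memory at a time, it cannot eager load relationships. PHP's PDO driver may also buffer the raw query results internally, so on very large tables the `lazy()` method described next can use less memory.

5.2 Chunking Data for Batch Processing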

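Eloquent's `lazy()` method, added during the Laravel 8.x release cycle, runs the query in chunks of 1,000 rows by default (ordering by the primary key if the query has no order) but still yields one model at a time. Calling `chunk()` on the resulting LazyCollection regroups those models into batches:

```php
use App\Models\Order;

// lazy() queries 1,000 rows at a time; chunk(1000) groups the stream
// into LazyCollections of 1,000 orders each
Order::lazy()->chunk(1000)->each(function ($orders) {
    foreach ($orders as $order) {
        // Process each order
        echo $order->id . "\n";
    }
});
```

If you update a column you are also filtering on while iterating, use `lazyById()` instead, which fetches each chunk by ID rather than by page offset.

5.3 Generating Large Data Streams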

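A LazyCollection can also feed a large API response. Returning the LazyCollection directly from a route would serialize every item into a single JSON string in memory, so this version streams the response and writes each item as it is generated, one JSON object per line (newline-delimited JSON):

```php
use Illuminate\Support\Facades\Route;
use Illuminate\Support\LazyCollection;

Route::get('/large-dataset', function () {
    $items = LazyCollection::make(function () {
        for ($i = 1; $i <= 1000000; $i++) {
            yield [
                'id' => $i,
                'value' => 'Item ' . $i,
            ];
        }
    });

    // stream() runs the callback while the response is being sent
    return response()->stream(function () use ($items) {
        foreach ($items as $item) {
            echo json_encode($item) . "\n";
        }
    }, 200, ['Content-Type' => 'application/x-ndjson']);
});
```

6. Performance Optimization Tips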

Use Database Indexing

Index the columns your queries filter and sort on. Chunked methods such as `lazy()` run one query per chunk, so a slow query is paid for again on every chunk.

Optimize Chunk Size

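Choose a chunk size that balances memory against query count: larger chunks mean fewer queries but more models in memory at once, and smaller chunks mean the opposite. Both `lazy()` and `LazyCollection::chunk()` take the size as an argument, so test a few values against your own data.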
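The trade-off can be seen in a plain-PHP sketch of the paging pattern that chunked methods like `lazy()` follow: fetch a page, yield its rows one by one, and stop after a short page. The in-memory `$table`, `lazyRows()`, and `measure()` are hypothetical stand-ins for a real table and query; this is not Laravel's actual implementation.

```php
<?php

// Hypothetical in-memory "table" of 2,500 rows standing in for a real one.
$table = range(1, 2500);

// Fetch one page, yield its rows one by one, stop after a short page.
// $stats records how many "queries" ran and the largest page held.
function lazyRows(array $table, int $chunkSize, stdClass $stats): Generator
{
    $page = 0;
    while (true) {
        // Stand-in for one "LIMIT ... OFFSET ..." query
        $rows = array_slice($table, $page++ * $chunkSize, $chunkSize);
        $stats->queries++;
        $stats->maxHeld = max($stats->maxHeld, count($rows));

        foreach ($rows as $row) {
            yield $row;
        }
        if (count($rows) < $chunkSize) {
            return; // a short (or empty) page means nothing is left
        }
    }
}

function measure(array $table, int $chunkSize): stdClass
{
    $stats = (object) ['queries' => 0, 'maxHeld' => 0];
    foreach (lazyRows($table, $chunkSize, $stats) as $row) {
        // process $row
    }
    return $stats;
}

foreach ([250, 1000, 5000] as $size) {
    $s = measure($table, $size);
    printf("chunk %5d: %2d queries, up to %4d rows held\n", $size, $s->queries, $s->maxHeld);
}
```

With 2,500 rows, a chunk size of 250 takes 11 queries (the last one returns nothing), 1,000 takes 3, and 5,000 fetches everything in one query but holds all 2,500 rows at once.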

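Avoid Unnecessary Eager Loading

When processing large datasets, eager load only the relationships you actually use, since each one adds related models to memory for every chunk. Note that `cursor()` cannot eager load relationships at all, so use `lazy()` when you need them.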

Use Queues for Long-Running Tasks

For extensive operations, offload processing to Laravel queues to improve user experience and system responsiveness.

7. Use Cases for LazyCollection

LazyCollection is versatile and can be applied to various scenarios, such as:

- **ETL (Extract, Transform, Load)**: Stream data from one source, transform it, and load it into another.

- **Log File Analysis**: Analyze large log files line by line.

- **Data Migration**: Move large tables between databases without loading them into memory all at once.

- **API Data Aggregation**: Fetch and process paginated data from APIs.
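The ETL and data-migration cases share one shape: each stage is a generator that pulls from the previous one. This plain-PHP sketch (no Laravel required) chains an extract, a transform, and a load step. The function names, the in-memory streams, and the two sample rows are illustrative, and `mapLazy()` only mimics what `LazyCollection::map()` does.

```php
<?php

// Extract: yield CSV rows from a stream one at a time, keyed by the header row.
function extractRows($stream): Generator
{
    $header = fgetcsv($stream, 0, ',', '"', '\\');
    while (($row = fgetcsv($stream, 0, ',', '"', '\\')) !== false) {
        yield array_combine($header, $row);
    }
}

// Transform: apply a callback lazily, similar to LazyCollection::map().
function mapLazy(iterable $items, callable $fn): Generator
{
    foreach ($items as $item) {
        yield $fn($item);
    }
}

// Load: write each record as one JSON line to the destination stream.
function loadNdjson(iterable $records, $stream): int
{
    $count = 0;
    foreach ($records as $record) {
        fwrite($stream, json_encode($record) . "\n");
        $count++;
    }
    return $count;
}

// php://memory streams stand in for the real source and destination.
$source = fopen('php://memory', 'w+');
fwrite($source, "id,name\n1,alice\n2,bob\n");
rewind($source);

$destination = fopen('php://memory', 'w+');

$loaded = loadNdjson(
    mapLazy(extractRows($source), fn (array $r) => [
        'id' => (int) $r['id'],
        'name' => ucfirst($r['name']),
    ]),
    $destination
);

rewind($destination);
$output = stream_get_contents($destination);
echo $output;
```

In a Laravel application, the same pipeline would typically read as `LazyCollection::make(...)->map(...)->each(...)`.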

Conclusion

Laravel's LazyCollection is a powerful tool for managing large datasets efficiently. By processing data incrementally, it reduces memory consumption and enables seamless handling of massive datasets in real-world applications. Whether you're working with files, databases, or APIs, LazyCollection is an essential feature for optimizing performance in Laravel applications.

Start experimenting with LazyCollection in your projects and unlock its potential to handle big data effortlessly.
